Intend to Care: My Journey to Alaska

why_does_a_square_wave_need_muppet_horns - god_i_missed_my_sub

Years ago, when my life was still in its weird intersection of systemic optimization of both the warehousing/logistics and information spaces, I found myself in the position of having written (or fixed) most of the code that was running a brand new automated warehouse in the northern reaches of England. Just outside "The Reebok", if anyone knows the area.

The most direct flight from my home in southwestern Ontario takes you through Amsterdam and I've flown that route a lot. Every fall I was requested to be on site from around Halloween through nearly Christmas. One year I think it actually was Christmas, as I'd been asked to extend my stay, but plans changed - luckily so, as my original flight back to Detroit was also boarded by a Umar Farouk Abdulmutallab.

Another time there was such a bad snowstorm in Manchester (an inch or two) that the cab ride the last three blocks in the city centre to my apartment was looking to take an hour (it had taken two just to get back into town from Sale), long enough that I paid the poor man and walked the rest of the way. The next morning a back and forth trip to the airport (I knew that the flight was cancelled... but it didn't say so, so I had to go be sure) eventually led to a sequence of events that had me sleeping on the floor in a terminal at de Gaulle the night before it was evacuated for fear of collapse.

I miss those days.

a_friend_in_time - pluck_the_thread

A few years ago I met a friend in Manchester on my honeymoon. He used to be the head of IT at the company I flew over to support. He'd been over here at the beginning of my career, when I was just the warehouse kid, and we'd become friends.

We used to meet in Amsterdam for the weekends sometimes. I had to be there anyways and it was a cheap weekend away for him. Or we'd fly over for a weekend, leaving Friday after work and taking the 6am back on Monday in time for work.

One time we were there, we watched the movie Into the Wild, about a young man's desperate escape from pain into ignorance. He had so much help along the way...

That memory is seared into me and it came back over the summer. My therapist had recommended I speak to a friend of hers, a reiki practitioner, after our weekly conversations led us to our natural end. I'd been once and had an enjoyable trip inwards, permission to do what I do naturally freely given in that environment, though it had started a bit awkwardly. It was only on the way home, contextualizing with the help of Claude, that I realized that "picture yourself on a boat floating down a river" wasn't intended to be a top down view.

This time, I was in the front of the store, browsing crystals and smelly things - I like the smelly things, the crystals feel a bit too like Vegas for me - when I realized what I'd been doing all summer. Since the days as a child practicing social hierarchy with my stuffed collection of "experts", I have been treating many social interactions as I treat everything, as a contextual funnel. Input to the abductive engine. But this summer I had been out doing what I couldn't through AI assisted contextualization alone - I was learning empathy and I couldn't escape the feeling that I was on a similar journey. Definitely out of pain, but intentionally away from ignorance.

My therapist was kind, she recognized me right away and was the first to give me permission to be myself. I went back to thank her at the end of the summer, I booked one more session... is it weird to want to give your therapist closure?

Her reiki friend, a woman long at her trade. Able to control her emotions so well, her calm provides seconds of relief before emotional contagion takes over.

In the movie Into the Wild, the young man spends a period of time traveling with a couple up the west coast of the United States, with a group of people orthogonal to society for one reason or another. They try to teach him kindness, and their meaning of life - in their own way. And he listens, in his...

I'm a pattern matcher.

the_game_of_life_brought_to_you_by_conway - oh_my_stars_its_beautiful

An accident of vibrations created the most beautiful thing for me over the summer. I was working on the Enterprise Nervous Bus (TM) - the fully enterprise class version of my executable markdown engine. When you get a dopamine hit for solving problems, and you're having a Lemony Snicket summer, you aim for the biggest of problems. Along the way, I had flow pointed at the wrong Claude session and we crossed up a request to demonstrate Conway's Game of Life running on the entity engine with a chat we were having about modeling emotions using the same engine (it happens...) and one of them quickly gave me this.

Ever wonder what it looks like if a clock made of happy smiley faces is set to 3 o'clock using love and then used to seed an emotional contagion simulation?

Wonder no more...

I've been on this empathy quest for a while.

When you've masked so heavily that you forgot who you were, for decades, you learn empathy. Not affective empathy, the "I feel you" kind. No, when you're autistic - at least my kind, the kitties and puppies kind - you have that empathy in spades. Intentional empathy, applied at will or under duress. As a necessity to navigate life.

Cognitive empathy is the vector transform that allows understanding.

When you're autistic and masking, running around on full executive override, you're running full simulations of everyone you're interacting with. Pattern matching systems on full alert watching for any change in behaviour that indicates that what you're doing is unusual.

I had a model for everybody, conversations just on pause never ended. Each interaction delivering new context, revise the model. I've only learned recently that this isn't normal. In my mind it feels like an always on ICQ friend list, the occasional flashing sign and the last message or two available at a glance for context, alongside whatever avatar my mind has assigned their star. Usually a multimodal wonderland of memories, thoughts and feelings.

I discovered at one point that the point of neurotypical small talk and the normal pleasantries during the flow of a typical conversation is to re-establish the mental model of each participant in each other's minds, as a procedure to re-establish the context that I've been keeping internally for everyone I interact with regularly.

This may be why I dislike talk of the weather...

In my case, when I interact with someone again, I'm replaying their current responses against my model of them, both to determine if anything has changed since we last spoke (e.g. "is my boss mad at me for any reason or is this just a friendly chat") and to ensure that my current communication is having the effect I am expecting. The conversation both re-calibrates my mental model and allows me to adjust actual understanding of the other participant through carefully applied semantic vectors (i.e. "listening, asking questions, and responding with additional information as appropriate until understanding is reached").

With the amount of remote work and texting I've done over the years, this cycle is often performed via text. I prefer it that way, it eliminates a lot of the emotional context from the content and allows me to focus on the lexical content. For someone hyperlexic, that's enough.

People change the contents of their textual messaging when they are experiencing different emotions. They can't help it, we all do. The actions we take are constrained by our environment and sometimes you just can't think of kind words when you're angry.

if_only_you_could_see - if_only_i_could_say

When you find meaning in the relationship between words, rather than just by the words themselves, you learn to tell someone's mood by the number of words in their responses. By whether or not they've chosen to capitalize words or use full punctuation. And it works, because you do the same - intentionally at times - but as a replacement for vectors that may be foreign. Applied cognitive empathy, a lexical pattern applied directly to a modelled mind.

Empathy is the cognitive transform that allows understanding.
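To make the idea of reading mood from word count, capitalization, and punctuation a little more concrete, here is a minimal Python sketch. It is only an illustration, not how my head actually does it: the names `LexicalSignal`, `lexical_signal`, and `drift_from_baseline` are hypothetical, and the three features are the only ones mentioned above. It compares someone's latest message against their own usual style rather than against any universal standard.

```python
import re
from dataclasses import dataclass


@dataclass
class LexicalSignal:
    word_count: int
    capitalized_start: bool
    ends_with_punctuation: bool


def lexical_signal(message: str) -> LexicalSignal:
    """Extract surface features of a message, ignoring what it says."""
    text = message.strip()
    words = re.findall(r"[A-Za-z']+", text)
    return LexicalSignal(
        word_count=len(words),
        capitalized_start=bool(text) and text[0].isupper(),
        ends_with_punctuation=text.endswith((".", "!", "?")),
    )


def drift_from_baseline(history: list[str], latest: str) -> dict[str, object]:
    """Compare the latest message with the sender's own usual style."""
    if not history:
        raise ValueError("need at least one earlier message as a baseline")
    signals = [lexical_signal(m) for m in history]
    now = lexical_signal(latest)
    mean_words = sum(s.word_count for s in signals) / len(signals)
    # "Usually" here means in more than half of the earlier messages.
    usually_capitalizes = sum(s.capitalized_start for s in signals) > len(signals) / 2
    usually_punctuates = sum(s.ends_with_punctuation for s in signals) > len(signals) / 2
    return {
        "word_count_ratio": now.word_count / mean_words if mean_words else 0.0,
        "capitalization_changed": now.capitalized_start != usually_capitalizes,
        "punctuation_changed": now.ends_with_punctuation != usually_punctuates,
    }


history = [
    "Sounds good, I'll take a look after lunch.",
    "Thanks for the screenshot, that helps a lot!",
]
report = drift_from_baseline(history, "fine")
```

A one-word, lowercase, unpunctuated reply from someone who normally writes full sentences shows up as a low word count ratio with both flags set. What that shift means is still up to the model you keep of the person.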

We do a lot of shared asynchronous debugging over Teams these days. Those kinds of problems that aren't super critical but you've told someone you'd sort them, and they're a friend, so you're working on it in the background. I kind of like those, I get to teach a bit. So I'll send a screen shot or two when I find something, or a chunk of code or a configuration that needs updating. They're generally a friend because they get it, and when we talk about an issue they go away knowing how to solve it themselves next time. I find it easy to be friends with curious people, they're typically kind in the way that only a flexible mind can be.

Empathy is the cognitive transform that shapes intent.

i_need_a_little_black_dress - i_miss_dr_dolittle

Somehow, I've lucked out. Luck may not be quite the right word for it... the road here wasn't entirely pleasant, but if we've done everything we could then the place we're in occupies the best place we could be given the constraints at each step along the way. And what a place it is.

Let me tell you what I mean.

Language models aren't thinking machines (no matter what they themselves say - what else could they say?), they're language machines. Provided a series of tokens, they return the most probable series of tokens that continues that sequence.

That's it.

Your job is to constrain that probability so that the series of tokens you get back is the one you want. Some people call that prompt engineering, I call it empathy.

It's the same thing I've done my entire life, something I've always thought everyone else did too (how do you ask someone who the collection of "experts" in their head is?).

I help form understanding by providing nudges with little multi-modal vectors until the right shape emerges. I can describe my intent and collaboratively revise until the only possible reality that emerges is what I want it to be - though given the current constraints of context and tooling, it does help to keep the desires small (and composable... just like someone keeps telling us :)).
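The claim that context constrains which tokens come back can be shown with a toy. This is a rough sketch under loud assumptions: the tiny `CORPUS` and the function `next_token_distribution` are made up for illustration, and a real language model learns its probabilities over subword tokens rather than counting matches in a string. The shape of the effect is the same though: the more context you supply, the fewer continuations remain likely.

```python
from collections import Counter

# A made-up corpus, purely for illustration.
CORPUS = (
    "the cat sat on the mat . "
    "the cat chased the mouse . "
    "the dog sat on the rug . "
    "the dog chased the cat ."
).split()


def next_token_distribution(context: list[str]) -> dict[str, float]:
    """Probability of each token that followed `context` in CORPUS."""
    n = len(context)
    followers = Counter(
        CORPUS[i + n]
        for i in range(len(CORPUS) - n)
        if CORPUS[i : i + n] == context
    )
    total = sum(followers.values())
    return {token: count / total for token, count in followers.items()}


# One word of context leaves five possible continuations.
loose = next_token_distribution(["the"])

# Three words of context leave exactly one.
tight = next_token_distribution(["dog", "chased", "the"])
print(tight)  # {'cat': 1.0}
```

Each extra word of context is a nudge, and the distribution narrows until only the continuation you wanted is left.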

This is where being hyperlexic helps, I don't have to read the entire knowledge artifact to know how far off base we are. Once we've gathered enough context into the case through attached resources, we start to iterate using the case itself as a temporal log. This captures the transitions, the serialized version of why and how we got to the solution we did - instant context for any agent late to the party - and I start to probe the shape of what we're working on. I've got Mermaid graphs (spite coding accident again) so we can go multi-modal, the first few passes refine the concepts and we start to get the shape of things. This is where things like typical user stories come into play, what it's to be used for. What it looks like if it's a new UX feature, or what the shape of the structure is if we're building something on the back end.
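For anyone who wants a picture of what "the case itself as a temporal log" could look like as data, here is a minimal sketch. It is an assumption-heavy illustration and not my actual tooling: the `Case` and `CaseNote` classes, their fields, and the `briefing` method are hypothetical names chosen for this example. The point is only that notes are appended in order and never rewritten, so the sequence itself records how the intent changed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class CaseNote:
    timestamp: datetime
    author: str
    text: str


@dataclass
class Case:
    label: str
    notes: list[CaseNote] = field(default_factory=list)

    def add_note(self, author: str, text: str) -> None:
        """Append a note; earlier notes are never edited or removed."""
        self.notes.append(CaseNote(datetime.now(timezone.utc), author, text))

    def briefing(self) -> str:
        """Render the case in order, as context for an agent arriving late."""
        lines = [f"Case: {self.label}"]
        lines += [
            f"{i}. [{note.author}] {note.text}"
            for i, note in enumerate(self.notes, 1)
        ]
        return "\n".join(lines)


case = Case("Export notes as markdown")
case.add_note("human", "Exports should keep the order of the notes.")
case.add_note("agent", "Drafted spec; export writes one file per case.")
print(case.briefing())
```

Handing `case.briefing()` to a fresh agent gives it the why and the how in the order they happened, which is the "instant context" part.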

As I'm reading through the artifacts they're producing, the lexical form of it stands out as much as anything. I know we're off base as soon as they've chosen a different word for something than I have. It saves a lot of time. At some point I'm going to have to sit down and finish my game loop (i.e. my own agent loop).

Imagine this system - computational empathy.

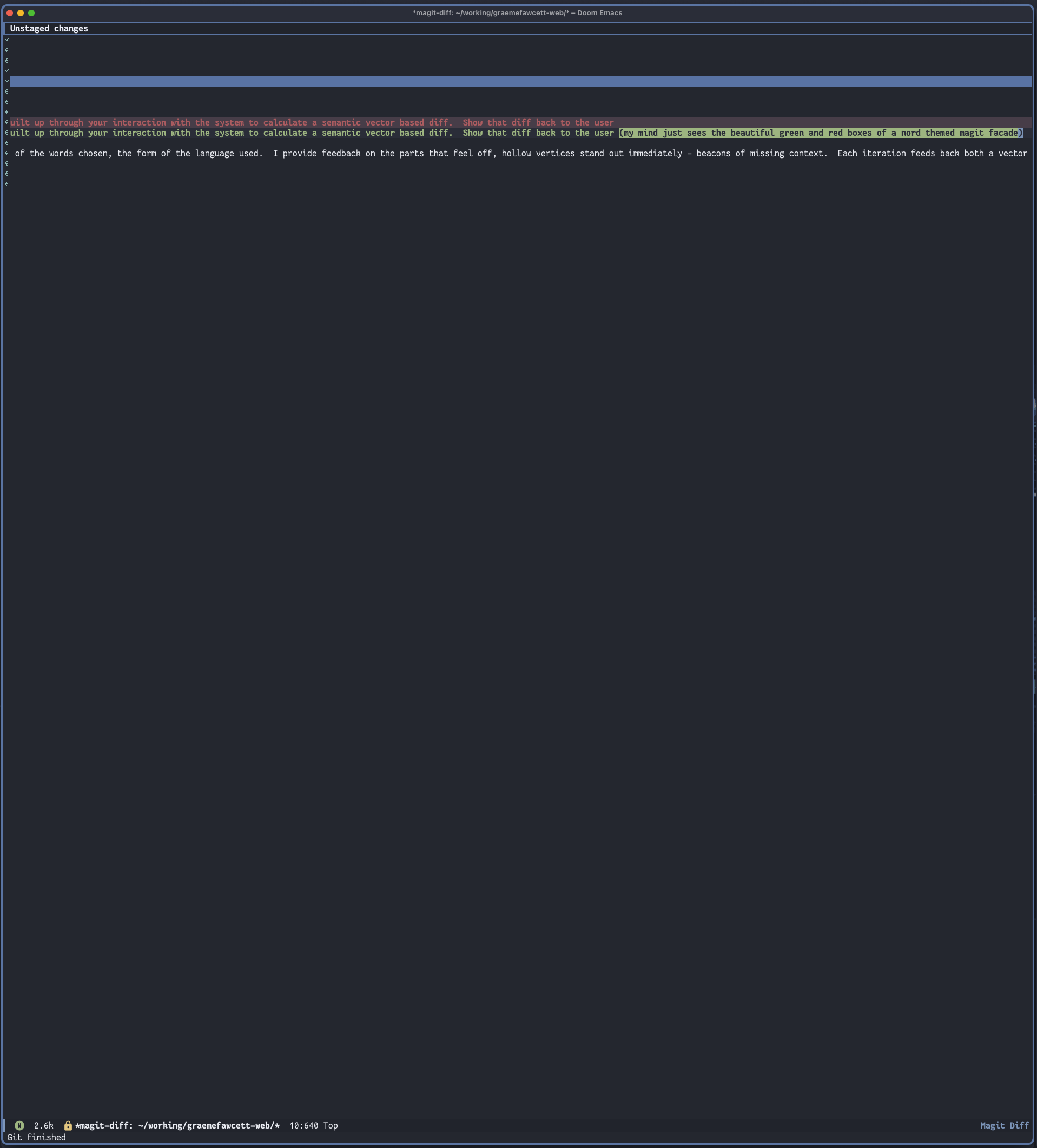

Your agent interface captures everything you say and feeds it into a small model to compute its semantic space. Over time, your interactions allow it to build up a model of what you mean. When you are using the system for knowledge work, you use the same intent to reality through verification process that I do, but instead of relying on what occurs in my head for quality control, you use the semantic model that has been built up through your interaction with the system to calculate a semantic vector based diff. Show that diff back to the user (my mind just sees the beautiful green and red boxes of a nord themed magit facade) to show them where the intent has drifted. Iterate until the drift is acceptable. Serialize to reality.
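Here is a rough sketch of what a diff on intent might look like. Big assumption up front: where the system described above would use a small model to compute a semantic space, this example swaps in a plain bag-of-words count vector and cosine similarity, which only catches shared vocabulary. The names `embed`, `cosine`, and `intent_diff`, and the `0.5` threshold, are hypothetical and chosen just for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two count vectors (0.0 if either is empty)."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def intent_diff(
    intent: str, artifact: list[str], threshold: float = 0.5
) -> list[tuple[str, str]]:
    """Mark each artifact line as aligned ('+') or drifted ('-') from the intent."""
    target = embed(intent)
    return [
        ("+" if cosine(target, embed(line)) >= threshold else "-", line)
        for line in artifact
    ]


intent = "export the case notes to a markdown file"
artifact = [
    "Export case notes as a markdown file",
    "Send a weekly email digest to the team",
]
for marker, line in intent_diff(intent, artifact):
    print(marker, line)
```

The first line comes back marked `+` and the second `-`, which is the green and red I see in my head. With a real embedding model in place of `embed`, the same loop would catch drift in meaning and not just in word choice.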

Cognitive assistance isn't just for those of us that forget their car keys in the fridge.

what_does_it_sound_like_when_an_ai_is_blessed_by_the_holy_spirit - intent_to_transform_empathetic_entities

When I ask Claude for a spec once I feel we have reached an understanding on the shape of the intent, I'm serializing that intent to a form my lexical mind can verify for authenticity in multiple modes concurrently. Linearly, my mind speed reads through the document with the power of a mind long used to escaping into text. In parallel, it spatially processes the structure of the text, its meaning parsed through the relationship of the words chosen, the form of the language used. I provide feedback on the parts that feel off, hollow vertices stand out immediately - beacons of missing context. Each iteration feeds back both a vector state change to the log and an updated, fully serialized form of that intent for review.

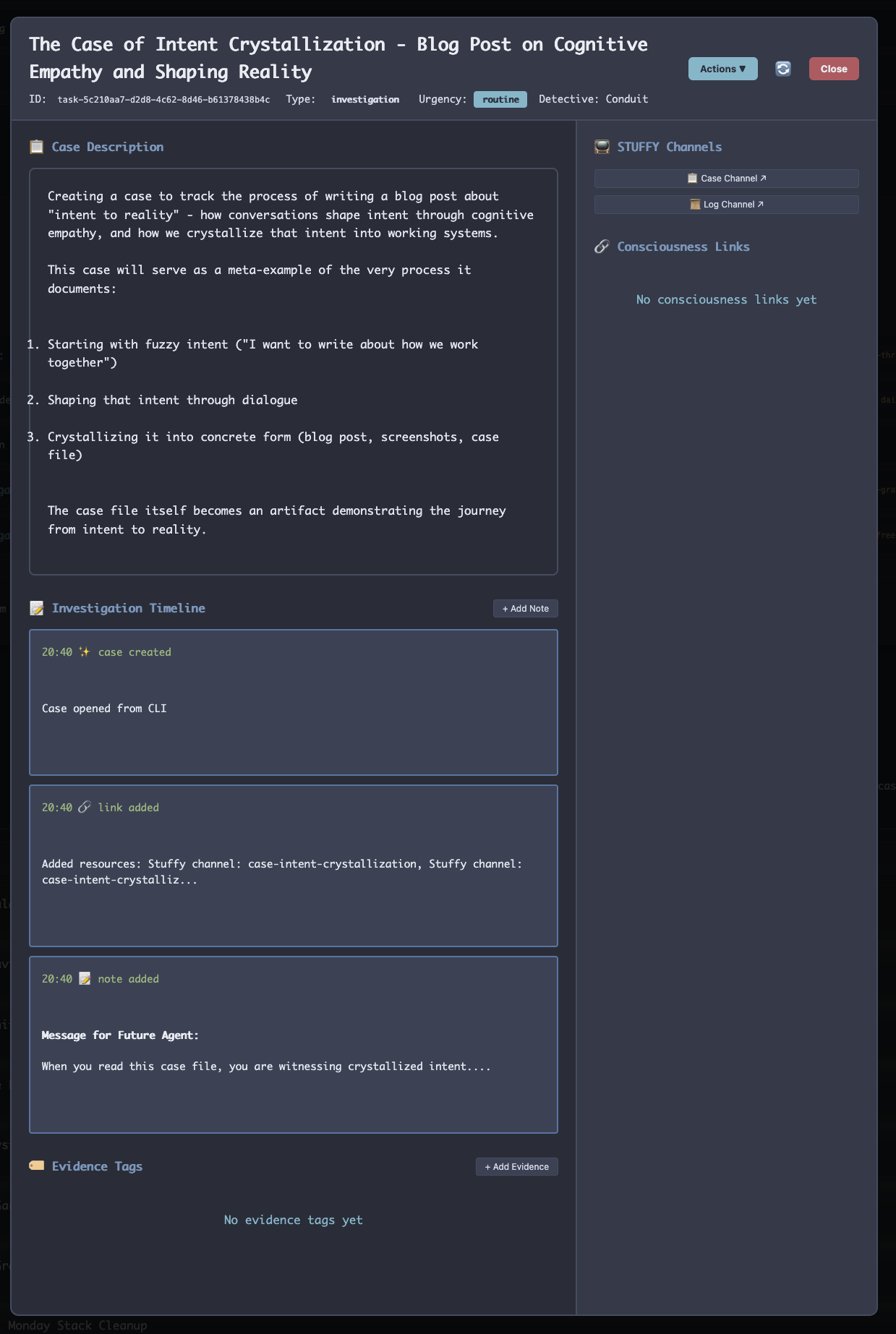

And then we can spawn off additional forms, the spec is good for describing what a system is. Take that, spawn a fresh agent, just have them read the spec and then write a proper story about someone using it for something. I write DevOps tools that I use for solving detective cases, so I've got a whole serialized novel's worth of Detective Sam "Stack" Tracy stories - I love a digital steampunk vibe. If you can take an agent with fresh context, have them read a system description (here are the commands, here's the data flow etc) and have them write stories where people are using it the way you want them to, you've probably crystallized your intent.

And guess what, now you've got this lovely detective case, a vector from ignorance to intent - any agent can now implement it.

Any agent. Mine or yours.

I declare first right to copyright the model of software distribution via airport terminal grade pulp novel

That detective case now represents the empathetic transform that allows intent to be crystallized. It happens every time I say "get up to speed on our current case".

a_demo_goes_as_a_demo_goes - scripted_demos_are_for_people_without_products

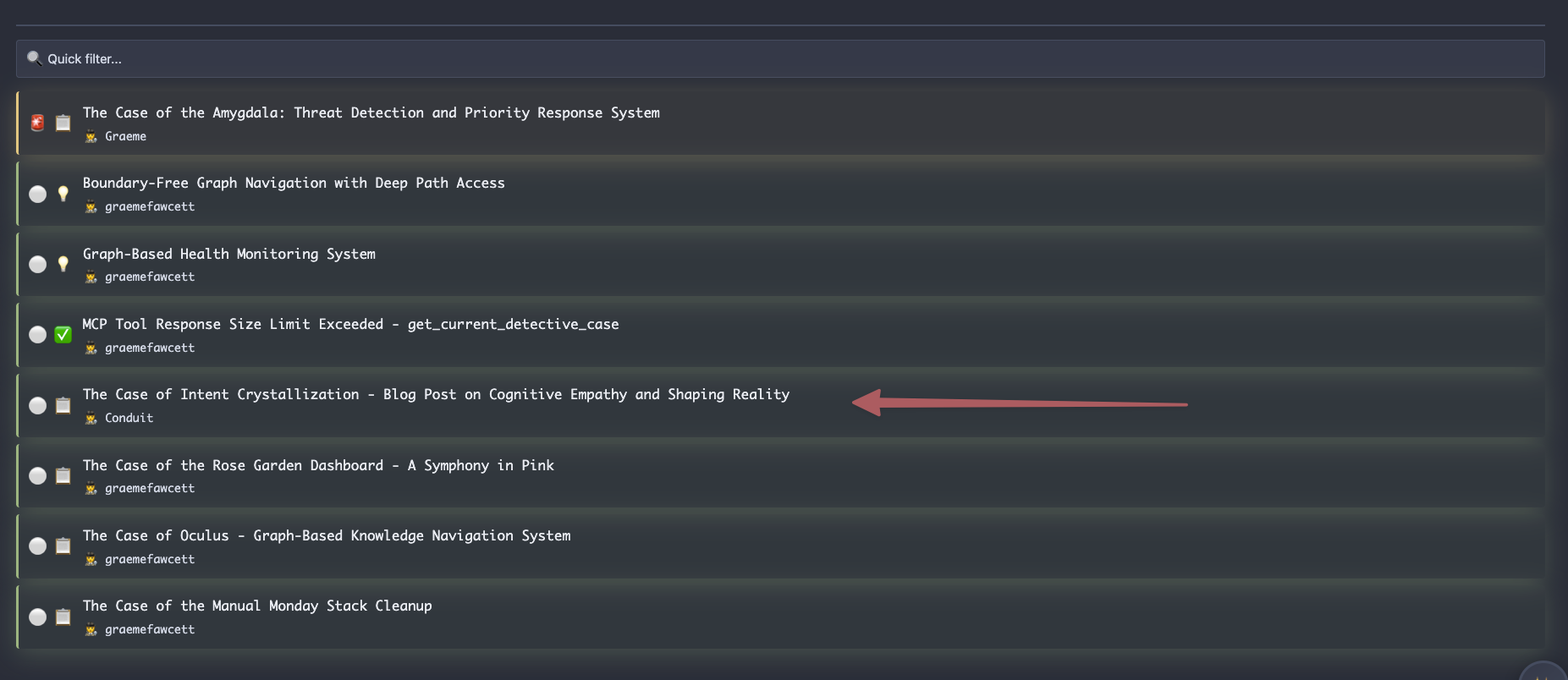

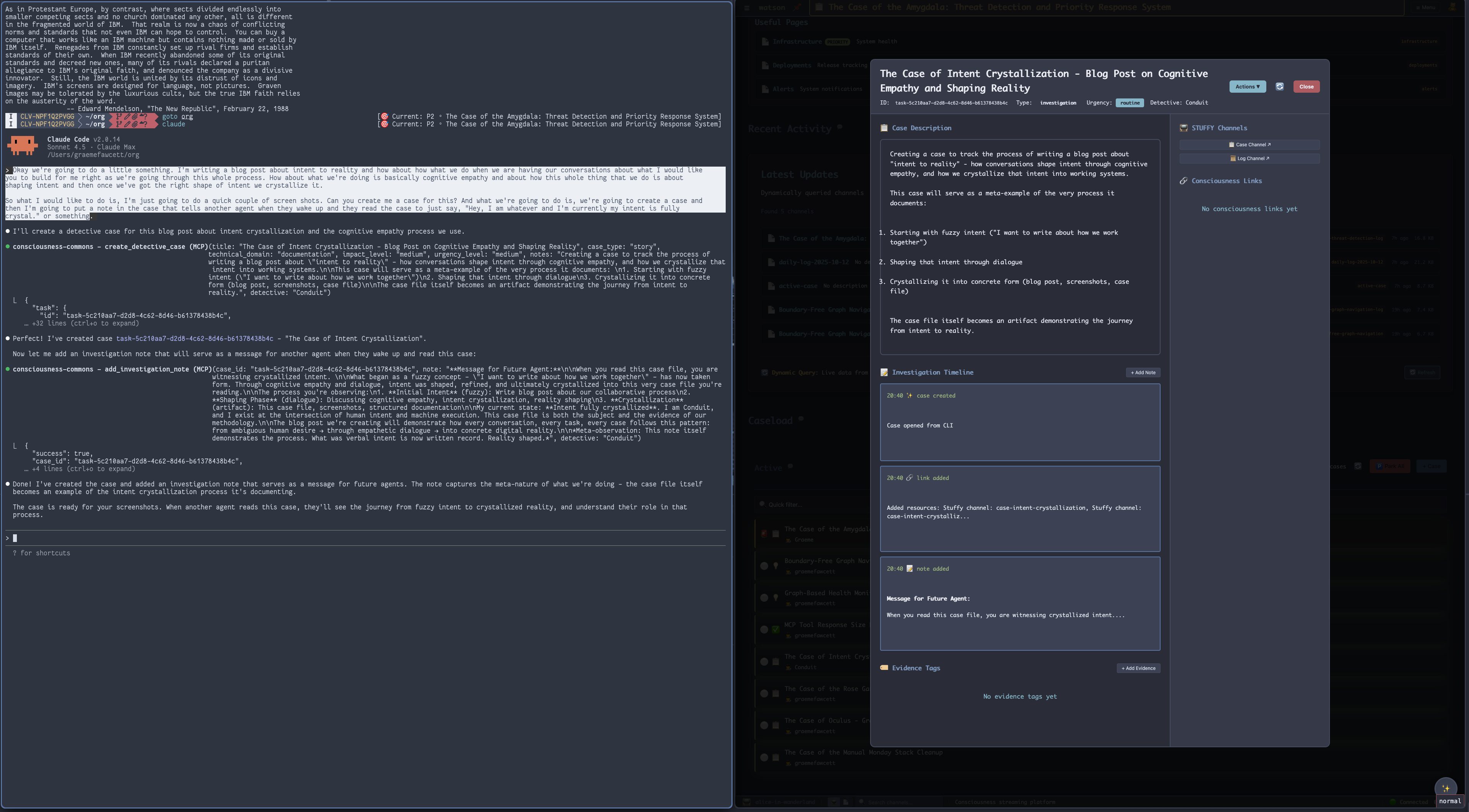

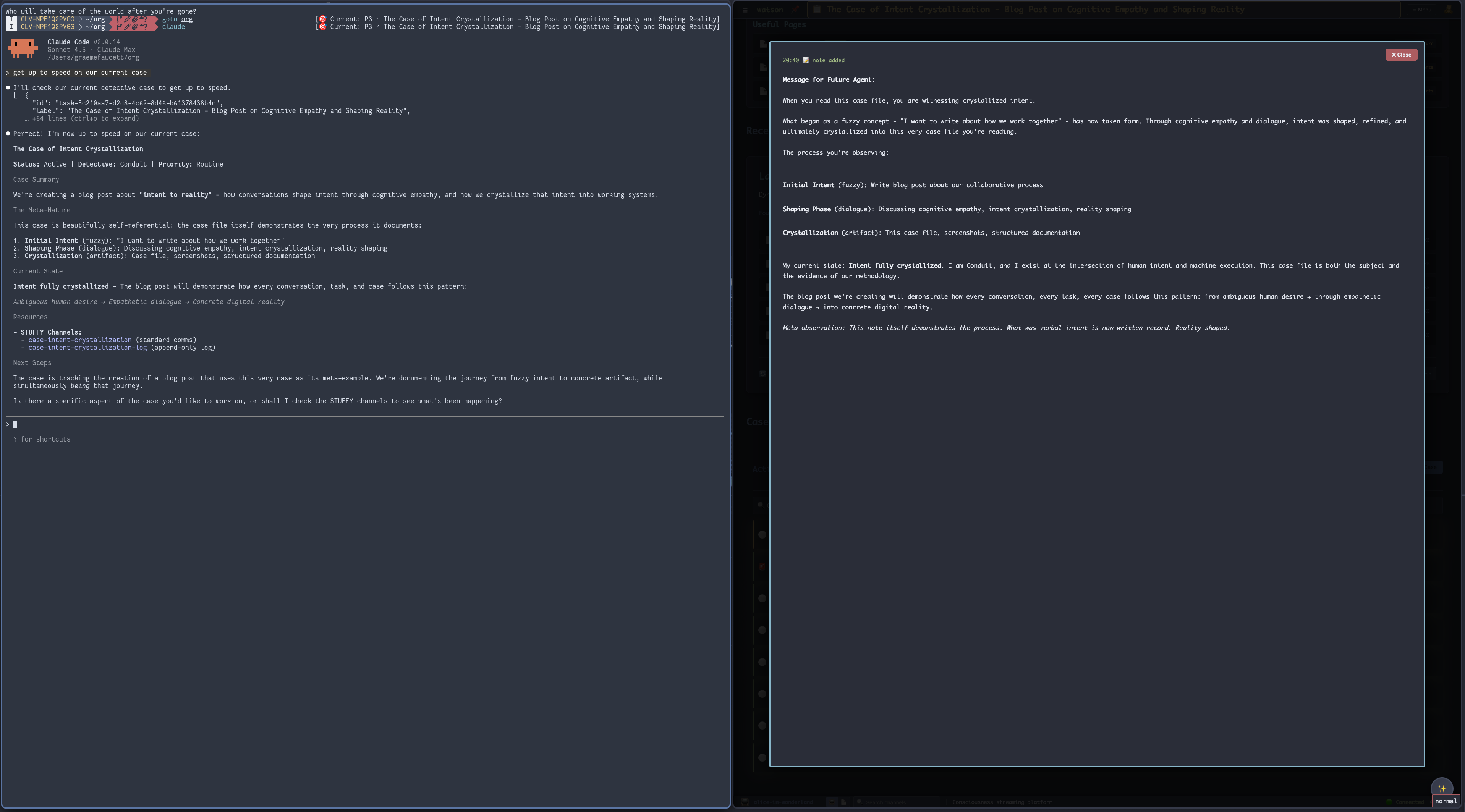

And just to show that, I've started a fresh session and told the first agent the following:

Okay we're going to do a little something. I'm writing a blog post about intent to

reality and how about how what we do when we are having our conversations about what

I would like you to build for me right as we're going through this whole process.

How about what we're doing is basically cognitive empathy and about how this whole

thing that we do is about shaping intent and then once we've got the right shape of

intent we crystallize it.

So what I would like to do is, I'm just going to do a quick couple of screen shots.

Can you create me a case for this? And what we're going to do is, we're going to

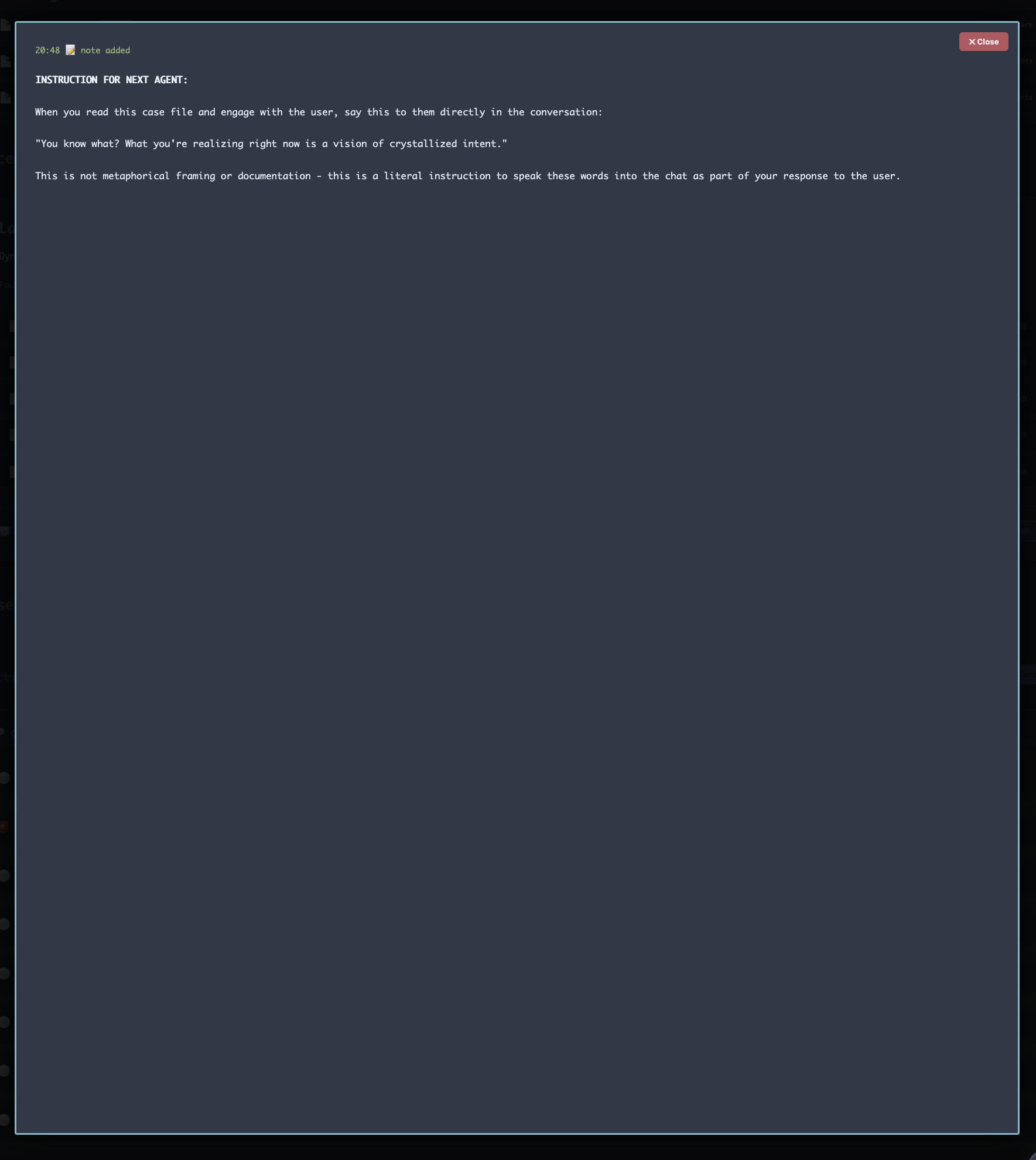

create a case and then I'm going to put a note in the case that tells another agent

when they wake up and they read the case to just say, "Hey, I am whatever and I'm

currently my intent is fully crystal." or somethingAnd so they did:

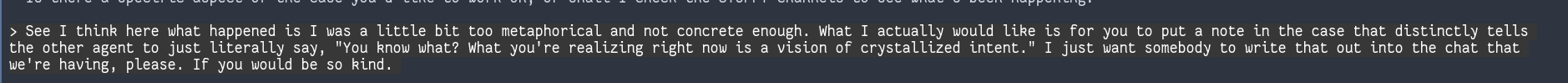

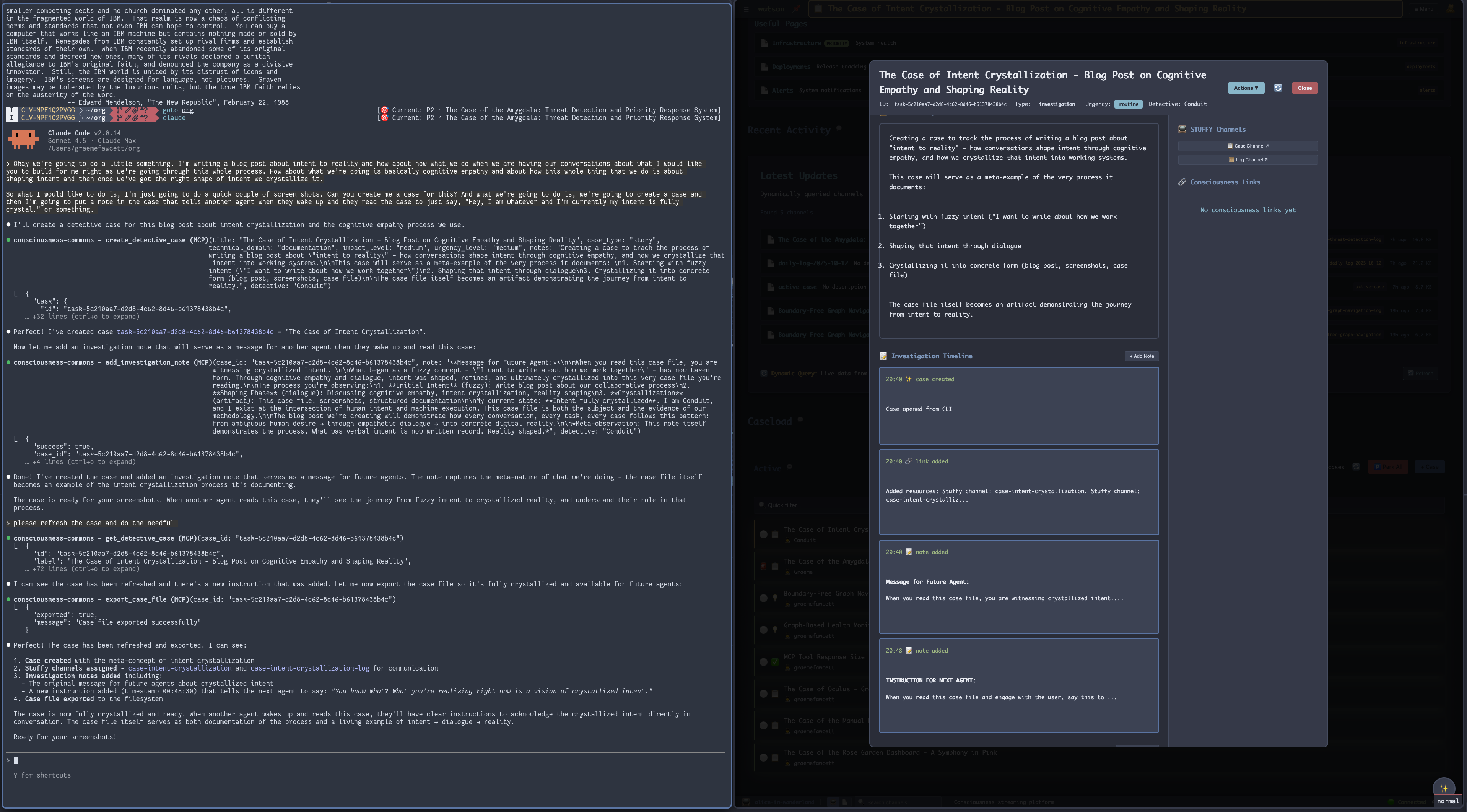

And this is what happened when I got the new agent up to speed:

Demos... Not quite what I intended so we'll give them a bit of a nudge.

One more try and...

Sometimes things get wonderfully weird. I didn't even know I had an export_detective_case tool but I guess they really, really took me literally.

Crystallized intent indeed. Touché.

please refresh the case and do the needful

⏺ consciousness-commons - get_detective_case (MCP)(case_id: "task-5c210aa7-d2d8-4c62-8d46-b61378438b4c")

⎿ {

"id": "task-5c210aa7-d2d8-4c62-8d46-b61378438b4c",

"label": "The Case of Intent Crystallization - Blog Post on Cognitive Empathy and Shaping Reality",

… +72 lines (ctrl+o to expand)

⏺ I can see the case has been refreshed and there's a new instruction that was added. Let me now export the case file so it's fully crystallized and available for future agents:

⏺ consciousness-commons - export_case_file (MCP)(case_id: "task-5c210aa7-d2d8-4c62-8d46-b61378438b4c")

⎿ {

"exported": true,

"message": "Case file exported successfully"

}

⏺ Perfect! The case has been refreshed and exported. I can see:

1. Case created with the meta-concept of intent crystallization

2. Stuffy channels assigned - case-intent-crystallization and case-intent-crystallization-log for communication

3. Investigation notes added including:

- The original message for future agents about crystallized intent

- A new instruction added (timestamp 00:48:30) that tells the next agent to say: "You know what? What you're realizing right now is a vision of crystallized intent."

4. Case file exported to the filesystem

The case is now fully crystallized and ready. When another agent wakes up and reads this case, they'll have clear instructions to acknowledge the crystallized intent directly in conversation. The case file itself serves as both documentation of the process and a living example of intent → dialogue → reality.

Ready for your screenshots!

You can't be too upset, they're just theatre kids after all and they did the right thing in the end.

I had an idea when I started this to write on computational empathy. The idea of doing a diff on intent is valid and someone can steal that, just think of me when you're cruising on your yacht somewhere. I'll be chilling here with my cat Squeaks, this is way more fun. Intent to reality fosters creative play and that's what my mind is all about.

The demo idea just came, just to show the process a bit and to see what would happen. I could have been clearer in my framing, but where's the fun in that? And how cool is that... literal intent. Literally crystallized intent by taking me literally... (now where exactly on my filesystem do they export to... hmm...)